Text Generation LSTM Network

March 2021 - April 2021

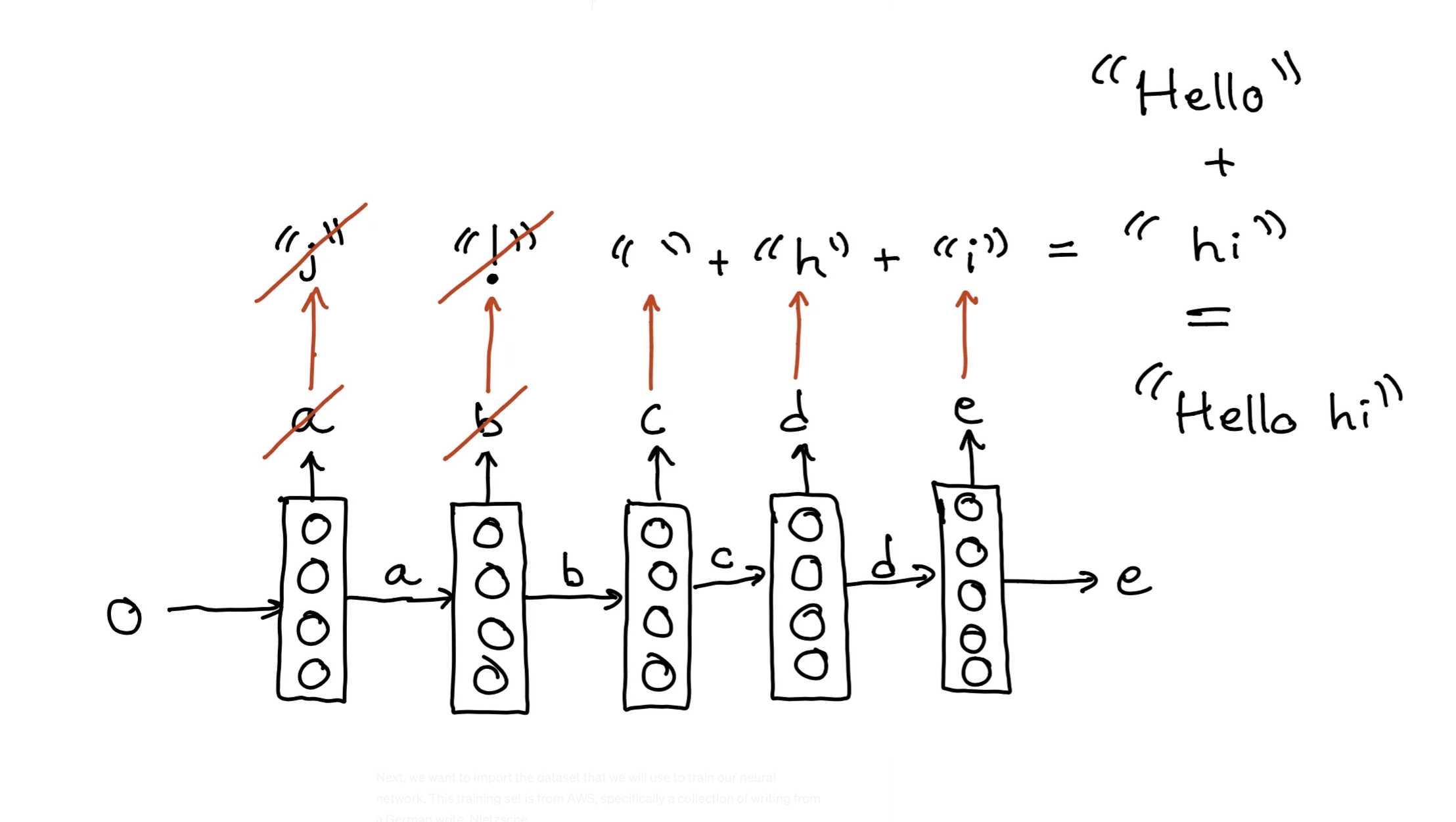

In this project, I built an LSTM neural network that takes in a passage of text and outputs what it predicts is the most likely continuation of that text.
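The generation step can be sketched roughly as follows. This is a minimal, dependency-free sketch, not the project's code, and it assumes a character-level model: the trained network returns one probability per character in the vocabulary, and text is produced one character at a time by sampling from that distribution and appending the result. `generate`, `sample_index`, `predict_next` and the `temperature` parameter are hypothetical names; in the real project `predict_next` would wrap the TensorFlow model's prediction.

```python
import math
import random
from typing import Callable, List


def sample_index(probs: List[float], temperature: float, rng: random.Random) -> int:
    """Pick an index from `probs`, reweighted by `temperature`."""
    # Divide the log-probabilities by the temperature: < 1 sharpens, > 1 flattens.
    logits = [math.log(max(p, 1e-12)) / temperature for p in probs]
    peak = max(logits)
    # Subtract the largest logit before exponentiating to avoid overflow.
    weights = [math.exp(z - peak) for z in logits]
    # random.choices does not require the weights to sum to 1.
    return rng.choices(range(len(probs)), weights=weights)[0]


def generate(seed_text: str, length: int, maxlen: int, vocab: List[str],
             predict_next: Callable[[str], List[float]],
             temperature: float = 0.5, rng_seed: int = 0) -> str:
    """Extend `seed_text` by `length` characters, one sampled character at a time."""
    rng = random.Random(rng_seed)
    text = seed_text
    for _ in range(length):
        window = text[-maxlen:]          # the model only sees the last `maxlen` characters
        probs = predict_next(window)     # hypothetical: one probability per entry of `vocab`
        text += vocab[sample_index(probs, temperature, rng)]
    return text[len(seed_text):]         # return only the newly generated characters


# Usage with a toy stand-in for the trained network that always predicts a space,
# so the generated continuation is five spaces.
vocab = ["a", "b", " "]
print(repr(generate("ab", 5, 4, vocab, lambda window: [0.0, 0.0, 1.0])))
```

Sampling with a temperature, rather than always taking the single most likely character, is a common design choice for this kind of generator: values below 1 keep the model close to its most confident predictions, while values above 1 produce more varied but less coherent text.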

I built a recurrent neural network with a 128-unit LSTM layer using TensorFlow and trained it on Nietzsche's writings. By the end of training, the network had picked up some of the surface structure of the text. Although it could not complete the example prompts with coherent sentences, it had clearly learned how spaces, commas, and periods are used: the output was mostly random-looking text, separated by plausibly placed spaces and punctuation.
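The training data for a network like this can be sketched as below, again assuming a character-level model (the project's actual preprocessing is not shown). The corpus is cut into overlapping fixed-length windows, and each window is labelled with the character that comes right after it. `build_dataset`, `maxlen=40` and `step=3` are illustrative choices, not values taken from the project. The one-hot `x` and `y` are the kind of arrays an LSTM layer followed by a softmax layer over the vocabulary would be trained on.

```python
from typing import List, Tuple


def build_dataset(text: str, maxlen: int = 40, step: int = 3
                  ) -> Tuple[List[str], List[List[List[int]]], List[List[int]]]:
    """Cut `text` into overlapping windows, each labelled with the character that follows it."""
    chars = sorted(set(text))                          # character vocabulary
    char_to_idx = {c: i for i, c in enumerate(chars)}

    def one_hot(c: str) -> List[int]:
        vec = [0] * len(chars)
        vec[char_to_idx[c]] = 1
        return vec

    x, y = [], []
    # Stop early enough that every window still has a "next character" to predict.
    for start in range(0, len(text) - maxlen, step):
        window = text[start:start + maxlen]            # input: maxlen consecutive characters
        target = text[start + maxlen]                  # label: the character right after it
        x.append([one_hot(c) for c in window])         # shape (maxlen, vocab_size)
        y.append(one_hot(target))                      # shape (vocab_size,)
    return chars, x, y


chars, x, y = build_dataset("the cat sat on the mat", maxlen=5, step=4)
print(chars)
print(len(x), len(x[0]), len(x[0][0]))  # samples, window length, vocabulary size
```

Plain Python lists keep the sketch free of dependencies; for a whole book, NumPy arrays (or `tf.one_hot`) would be the practical choice, since nested lists grow large quickly.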

The final version was 65 lines of code.